The C++Course provides a general introduction to programming in C++. It is based on A.B. Downey's book, How to Think Like a Computer Scientist.

See also: Mergesort, Heapsort

Analysis of Mergesort

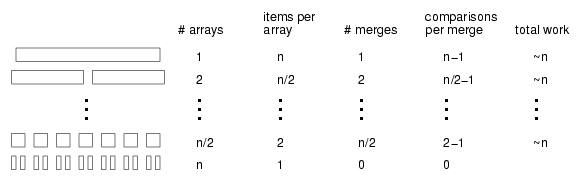

In Section 13.10 I claimed that mergesort takes time that is proportional to n log n, but I didn't explain how or why. Now I will. Again, we start by looking at pseudocode for the algorithm. For mergesort, it's mergeSort (array) {// find the midpoint of the array // divide the array into two halves // sort the halves recursively // merge the two halves and return the result } At each level of the recursion, we split the array in half, make two recursive calls, and then merge the halves. Graphically, the process looks like this:
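To make the pseudocode concrete, here is a minimal sketch of one way it could be written in C++. It assumes the array is a `std::vector<int>` rather than whatever container the earlier sections use, and the helper name `mergeHalves` is hypothetical; your own merge from the previous chapter may look different.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical helper: merge two already-sorted vectors into one sorted vector.
std::vector<int> mergeHalves(const std::vector<int>& left,
                             const std::vector<int>& right) {
    std::vector<int> result;
    result.reserve(left.size() + right.size());
    std::size_t i = 0, j = 0;
    // Repeatedly take the smaller of the two front elements.
    while (i < left.size() && j < right.size()) {
        if (left[i] <= right[j]) {
            result.push_back(left[i++]);
        } else {
            result.push_back(right[j++]);
        }
    }
    // One half is exhausted; copy whatever remains of the other.
    while (i < left.size()) result.push_back(left[i++]);
    while (j < right.size()) result.push_back(right[j++]);
    return result;
}

// Sketch of the pseudocode, assuming the array is a std::vector<int>.
std::vector<int> mergeSort(const std::vector<int>& array) {
    // Base case: zero or one element is already sorted.
    if (array.size() <= 1) return array;

    // find the midpoint of the array
    std::size_t mid = array.size() / 2;

    // divide the array into two halves
    std::vector<int> left(array.begin(), array.begin() + mid);
    std::vector<int> right(array.begin() + mid, array.end());

    // sort the halves recursively, then merge them and return the result
    return mergeHalves(mergeSort(left), mergeSort(right));
}
```

Calling `mergeSort` on a vector returns a sorted copy and leaves the original untouched. Copying the halves keeps the code close to the pseudocode, at the cost of extra memory.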

Each line in the diagram is a level of the recursion. At the top, a single array divides into two halves. At the bottom, n arrays (with one element each) are merged into n/2 arrays (with 2 elements each).

The first two columns of the table show the number of arrays at each level and the number of items in each array. The third column shows the number of merges that take place at each level of recursion. The next column is the one that takes the most thought: it shows the number of comparisons each merge performs. If you look at the pseudocode (or your implementation) of merge, you should convince yourself that in the worst case it takes m-1 comparisons, where m is the total number of items being merged.

The next step is to multiply the number of merges at each level by the amount of work (comparisons) per merge. The result is the total work at each level. At this point we take advantage of a small trick. We know that in the end we are only interested in the leading-order term in the result, so we can go ahead and ignore the -1 term in the comparisons per merge. If we do that, then a level with n/m merges of m items each does (n/m) · m comparisons, so the total work at each level is simply n.

Next we need to know the number of levels as a function of n. Well, we start with an array of n items and divide it in half until it gets to 1. That's the same as starting at 1 and multiplying by 2 until we get to n. In other words, we want to know how many times we have to multiply by 2 before we get to n. The answer is that the number of levels, l, is the logarithm, base 2, of n (rounded up if n is not a power of 2).

Finally, we multiply the amount of work per level, n, by the number of levels, log₂ n, to get n log₂ n, as promised. There isn't a good name for this functional form; most of the time people just say, "en log en."

It might not be obvious at first that n log₂ n is better than n², but for large values of n, it is. As an exercise, write a program that prints n log₂ n and n² for a range of values of n.
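One way to check the counting argument is to instrument a mergesort so that it tallies comparisons. The following is a rough sketch, not the book's implementation: the names `countingMerge`, `countingMergeSort`, and `levels` are hypothetical, and it assumes `std::vector<int>` as the array type.

```cpp
#include <cstddef>
#include <vector>

// Merge two sorted vectors, adding the number of element comparisons
// performed to `comparisons`. (Hypothetical helper for illustration.)
std::vector<int> countingMerge(const std::vector<int>& left,
                               const std::vector<int>& right,
                               long& comparisons) {
    std::vector<int> result;
    result.reserve(left.size() + right.size());
    std::size_t i = 0, j = 0;
    while (i < left.size() && j < right.size()) {
        ++comparisons;  // one comparison per pass through this loop
        if (left[i] <= right[j]) {
            result.push_back(left[i++]);
        } else {
            result.push_back(right[j++]);
        }
    }
    // The leftover items are copied without any comparisons.
    while (i < left.size()) result.push_back(left[i++]);
    while (j < right.size()) result.push_back(right[j++]);
    return result;
}

// Mergesort that returns a sorted copy and accumulates the comparison count.
std::vector<int> countingMergeSort(const std::vector<int>& array,
                                   long& comparisons) {
    if (array.size() <= 1) return array;
    std::size_t mid = array.size() / 2;
    std::vector<int> left(array.begin(), array.begin() + mid);
    std::vector<int> right(array.begin() + mid, array.end());
    std::vector<int> sortedLeft = countingMergeSort(left, comparisons);
    std::vector<int> sortedRight = countingMergeSort(right, comparisons);
    return countingMerge(sortedLeft, sortedRight, comparisons);
}

// Number of times n must be halved (rounding up) to reach 1,
// which is log2(n) rounded up to a whole number.
int levels(std::size_t n) {
    int count = 0;
    while (n > 1) {
        n = (n + 1) / 2;
        ++count;
    }
    return count;
}
```

Merging `{1, 3, 5, 7}` with `{2, 4, 6, 8}` forces a comparison for every item but the last, which is the m-1 worst case for m = 8. For a whole sort, the accumulated count should never exceed n times `levels(n)`, the bound we get by ignoring the -1 term.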

Last Update: 2005-11-21